docs ready

2

.gitignore

vendored

Normal file

|

|

@ -0,0 +1,2 @@

|

|||

.ipynb_checkpoints

|

||||

build

|

||||

217

docs/Makefile

Normal file

|

|

@ -0,0 +1,217 @@

|

|||

# Makefile for Sphinx documentation

|

||||

#

|

||||

|

||||

# You can set these variables from the command line.

|

||||

SPHINXOPTS =

|

||||

SPHINXBUILD = sphinx-build

|

||||

PAPER =

|

||||

BUILDDIR = build

|

||||

NBCONVERT = ipython nbconvert

|

||||

|

||||

# User-friendly check for sphinx-build

|

||||

ifeq ($(shell which $(SPHINXBUILD) >/dev/null 2>&1; echo $$?), 1)

|

||||

$(error The '$(SPHINXBUILD)' command was not found. Make sure you have Sphinx installed, then set the SPHINXBUILD environment variable to point to the full path of the '$(SPHINXBUILD)' executable. Alternatively you can add the directory with the executable to your PATH. If you don't have Sphinx installed, grab it from http://sphinx-doc.org/)

|

||||

endif

|

||||

|

||||

# Internal variables.

|

||||

PAPEROPT_a4 = -D latex_paper_size=a4

|

||||

PAPEROPT_letter = -D latex_paper_size=letter

|

||||

ALLSPHINXOPTS = -d $(BUILDDIR)/doctrees $(PAPEROPT_$(PAPER)) $(SPHINXOPTS) source

|

||||

# the i18n builder cannot share the environment and doctrees with the others

|

||||

I18NSPHINXOPTS = $(PAPEROPT_$(PAPER)) $(SPHINXOPTS) source

|

||||

|

||||

.PHONY: help

|

||||

help:

|

||||

@echo "Please use \`make <target>' where <target> is one of"

|

||||

@echo " html to make standalone HTML files"

|

||||

@echo " dirhtml to make HTML files named index.html in directories"

|

||||

@echo " singlehtml to make a single large HTML file"

|

||||

@echo " pickle to make pickle files"

|

||||

@echo " json to make JSON files"

|

||||

@echo " htmlhelp to make HTML files and a HTML help project"

|

||||

@echo " qthelp to make HTML files and a qthelp project"

|

||||

@echo " applehelp to make an Apple Help Book"

|

||||

@echo " devhelp to make HTML files and a Devhelp project"

|

||||

@echo " epub to make an epub"

|

||||

@echo " latex to make LaTeX files, you can set PAPER=a4 or PAPER=letter"

|

||||

@echo " latexpdf to make LaTeX files and run them through pdflatex"

|

||||

@echo " latexpdfja to make LaTeX files and run them through platex/dvipdfmx"

|

||||

@echo " text to make text files"

|

||||

@echo " man to make manual pages"

|

||||

@echo " texinfo to make Texinfo files"

|

||||

@echo " info to make Texinfo files and run them through makeinfo"

|

||||

@echo " gettext to make PO message catalogs"

|

||||

@echo " changes to make an overview of all changed/added/deprecated items"

|

||||

@echo " xml to make Docutils-native XML files"

|

||||

@echo " pseudoxml to make pseudoxml-XML files for display purposes"

|

||||

@echo " linkcheck to check all external links for integrity"

|

||||

@echo " doctest to run all doctests embedded in the documentation (if enabled)"

|

||||

@echo " coverage to run coverage check of the documentation (if enabled)"

|

||||

|

||||

.PHONY: clean

|

||||

clean:

|

||||

rm -rf $(BUILDDIR)/*

|

||||

|

||||

.PHONY: html

|

||||

html:

|

||||

$(SPHINXBUILD) -vb html $(ALLSPHINXOPTS) $(BUILDDIR)/html

|

||||

@echo

|

||||

@echo "Build finished. The HTML pages are in $(BUILDDIR)/html."

|

||||

|

||||

.PHONY: dirhtml

|

||||

dirhtml:

|

||||

$(SPHINXBUILD) -b dirhtml $(ALLSPHINXOPTS) $(BUILDDIR)/dirhtml

|

||||

@echo

|

||||

@echo "Build finished. The HTML pages are in $(BUILDDIR)/dirhtml."

|

||||

|

||||

.PHONY: singlehtml

|

||||

singlehtml:

|

||||

$(SPHINXBUILD) -b singlehtml $(ALLSPHINXOPTS) $(BUILDDIR)/singlehtml

|

||||

@echo

|

||||

@echo "Build finished. The HTML page is in $(BUILDDIR)/singlehtml."

|

||||

|

||||

.PHONY: pickle

|

||||

pickle:

|

||||

$(SPHINXBUILD) -b pickle $(ALLSPHINXOPTS) $(BUILDDIR)/pickle

|

||||

@echo

|

||||

@echo "Build finished; now you can process the pickle files."

|

||||

|

||||

.PHONY: json

|

||||

json:

|

||||

$(SPHINXBUILD) -b json $(ALLSPHINXOPTS) $(BUILDDIR)/json

|

||||

@echo

|

||||

@echo "Build finished; now you can process the JSON files."

|

||||

|

||||

.PHONY: htmlhelp

|

||||

htmlhelp:

|

||||

$(SPHINXBUILD) -b htmlhelp $(ALLSPHINXOPTS) $(BUILDDIR)/htmlhelp

|

||||

@echo

|

||||

@echo "Build finished; now you can run HTML Help Workshop with the" \

|

||||

".hhp project file in $(BUILDDIR)/htmlhelp."

|

||||

|

||||

.PHONY: qthelp

|

||||

qthelp:

|

||||

$(SPHINXBUILD) -b qthelp $(ALLSPHINXOPTS) $(BUILDDIR)/qthelp

|

||||

@echo

|

||||

@echo "Build finished; now you can run "qcollectiongenerator" with the" \

|

||||

".qhcp project file in $(BUILDDIR)/qthelp, like this:"

|

||||

@echo "# qcollectiongenerator $(BUILDDIR)/qthelp/python-awips.qhcp"

|

||||

@echo "To view the help file:"

|

||||

@echo "# assistant -collectionFile $(BUILDDIR)/qthelp/python-awips.qhc"

|

||||

|

||||

.PHONY: applehelp

|

||||

applehelp:

|

||||

$(SPHINXBUILD) -b applehelp $(ALLSPHINXOPTS) $(BUILDDIR)/applehelp

|

||||

@echo

|

||||

@echo "Build finished. The help book is in $(BUILDDIR)/applehelp."

|

||||

@echo "N.B. You won't be able to view it unless you put it in" \

|

||||

"~/Library/Documentation/Help or install it in your application" \

|

||||

"bundle."

|

||||

|

||||

.PHONY: devhelp

|

||||

devhelp:

|

||||

$(SPHINXBUILD) -b devhelp $(ALLSPHINXOPTS) $(BUILDDIR)/devhelp

|

||||

@echo

|

||||

@echo "Build finished."

|

||||

@echo "To view the help file:"

|

||||

@echo "# mkdir -p $$HOME/.local/share/devhelp/python-awips"

|

||||

@echo "# ln -s $(BUILDDIR)/devhelp $$HOME/.local/share/devhelp/python-awips"

|

||||

@echo "# devhelp"

|

||||

|

||||

.PHONY: epub

|

||||

epub:

|

||||

$(SPHINXBUILD) -b epub $(ALLSPHINXOPTS) $(BUILDDIR)/epub

|

||||

@echo

|

||||

@echo "Build finished. The epub file is in $(BUILDDIR)/epub."

|

||||

|

||||

.PHONY: latex

|

||||

latex:

|

||||

$(SPHINXBUILD) -b latex $(ALLSPHINXOPTS) $(BUILDDIR)/latex

|

||||

@echo

|

||||

@echo "Build finished; the LaTeX files are in $(BUILDDIR)/latex."

|

||||

@echo "Run \`make' in that directory to run these through (pdf)latex" \

|

||||

"(use \`make latexpdf' here to do that automatically)."

|

||||

|

||||

.PHONY: latexpdf

|

||||

latexpdf:

|

||||

$(SPHINXBUILD) -b latex $(ALLSPHINXOPTS) $(BUILDDIR)/latex

|

||||

@echo "Running LaTeX files through pdflatex..."

|

||||

$(MAKE) -C $(BUILDDIR)/latex all-pdf

|

||||

@echo "pdflatex finished; the PDF files are in $(BUILDDIR)/latex."

|

||||

|

||||

.PHONY: latexpdfja

|

||||

latexpdfja:

|

||||

$(SPHINXBUILD) -b latex $(ALLSPHINXOPTS) $(BUILDDIR)/latex

|

||||

@echo "Running LaTeX files through platex and dvipdfmx..."

|

||||

$(MAKE) -C $(BUILDDIR)/latex all-pdf-ja

|

||||

@echo "pdflatex finished; the PDF files are in $(BUILDDIR)/latex."

|

||||

|

||||

.PHONY: text

|

||||

text:

|

||||

$(SPHINXBUILD) -b text $(ALLSPHINXOPTS) $(BUILDDIR)/text

|

||||

@echo

|

||||

@echo "Build finished. The text files are in $(BUILDDIR)/text."

|

||||

|

||||

.PHONY: man

|

||||

man:

|

||||

$(SPHINXBUILD) -b man $(ALLSPHINXOPTS) $(BUILDDIR)/man

|

||||

@echo

|

||||

@echo "Build finished. The manual pages are in $(BUILDDIR)/man."

|

||||

|

||||

.PHONY: texinfo

|

||||

texinfo:

|

||||

$(SPHINXBUILD) -b texinfo $(ALLSPHINXOPTS) $(BUILDDIR)/texinfo

|

||||

@echo

|

||||

@echo "Build finished. The Texinfo files are in $(BUILDDIR)/texinfo."

|

||||

@echo "Run \`make' in that directory to run these through makeinfo" \

|

||||

"(use \`make info' here to do that automatically)."

|

||||

|

||||

.PHONY: info

|

||||

info:

|

||||

$(SPHINXBUILD) -b texinfo $(ALLSPHINXOPTS) $(BUILDDIR)/texinfo

|

||||

@echo "Running Texinfo files through makeinfo..."

|

||||

$(MAKE) -C $(BUILDDIR)/texinfo info

|

||||

@echo "makeinfo finished; the Info files are in $(BUILDDIR)/texinfo."

|

||||

|

||||

.PHONY: gettext

|

||||

gettext:

|

||||

$(SPHINXBUILD) -b gettext $(I18NSPHINXOPTS) $(BUILDDIR)/locale

|

||||

@echo

|

||||

@echo "Build finished. The message catalogs are in $(BUILDDIR)/locale."

|

||||

|

||||

.PHONY: changes

|

||||

changes:

|

||||

$(SPHINXBUILD) -b changes $(ALLSPHINXOPTS) $(BUILDDIR)/changes

|

||||

@echo

|

||||

@echo "The overview file is in $(BUILDDIR)/changes."

|

||||

|

||||

.PHONY: linkcheck

|

||||

linkcheck:

|

||||

$(SPHINXBUILD) -b linkcheck $(ALLSPHINXOPTS) $(BUILDDIR)/linkcheck

|

||||

@echo

|

||||

@echo "Link check complete; look for any errors in the above output " \

|

||||

"or in $(BUILDDIR)/linkcheck/output.txt."

|

||||

|

||||

.PHONY: doctest

|

||||

doctest:

|

||||

$(SPHINXBUILD) -b doctest $(ALLSPHINXOPTS) $(BUILDDIR)/doctest

|

||||

@echo "Testing of doctests in the sources finished, look at the " \

|

||||

"results in $(BUILDDIR)/doctest/output.txt."

|

||||

|

||||

.PHONY: coverage

|

||||

coverage:

|

||||

$(SPHINXBUILD) -b coverage $(ALLSPHINXOPTS) $(BUILDDIR)/coverage

|

||||

@echo "Testing of coverage in the sources finished, look at the " \

|

||||

"results in $(BUILDDIR)/coverage/python.txt."

|

||||

|

||||

.PHONY: xml

|

||||

xml:

|

||||

$(SPHINXBUILD) -b xml $(ALLSPHINXOPTS) $(BUILDDIR)/xml

|

||||

@echo

|

||||

@echo "Build finished. The XML files are in $(BUILDDIR)/xml."

|

||||

|

||||

.PHONY: pseudoxml

|

||||

pseudoxml:

|

||||

$(SPHINXBUILD) -b pseudoxml $(ALLSPHINXOPTS) $(BUILDDIR)/pseudoxml

|

||||

@echo

|

||||

@echo "Build finished. The pseudo-XML files are in $(BUILDDIR)/pseudoxml."

|

||||

263

docs/make.bat

Normal file

|

|

@ -0,0 +1,263 @@

|

|||

@ECHO OFF

|

||||

|

||||

REM Command file for Sphinx documentation

|

||||

|

||||

if "%SPHINXBUILD%" == "" (

|

||||

set SPHINXBUILD=sphinx-build

|

||||

)

|

||||

set BUILDDIR=build

|

||||

set ALLSPHINXOPTS=-d %BUILDDIR%/doctrees %SPHINXOPTS% source

|

||||

set I18NSPHINXOPTS=%SPHINXOPTS% source

|

||||

if NOT "%PAPER%" == "" (

|

||||

set ALLSPHINXOPTS=-D latex_paper_size=%PAPER% %ALLSPHINXOPTS%

|

||||

set I18NSPHINXOPTS=-D latex_paper_size=%PAPER% %I18NSPHINXOPTS%

|

||||

)

|

||||

|

||||

if "%1" == "" goto help

|

||||

|

||||

if "%1" == "help" (

|

||||

:help

|

||||

echo.Please use `make ^<target^>` where ^<target^> is one of

|

||||

echo. html to make standalone HTML files

|

||||

echo. dirhtml to make HTML files named index.html in directories

|

||||

echo. singlehtml to make a single large HTML file

|

||||

echo. pickle to make pickle files

|

||||

echo. json to make JSON files

|

||||

echo. htmlhelp to make HTML files and a HTML help project

|

||||

echo. qthelp to make HTML files and a qthelp project

|

||||

echo. devhelp to make HTML files and a Devhelp project

|

||||

echo. epub to make an epub

|

||||

echo. latex to make LaTeX files, you can set PAPER=a4 or PAPER=letter

|

||||

echo. text to make text files

|

||||

echo. man to make manual pages

|

||||

echo. texinfo to make Texinfo files

|

||||

echo. gettext to make PO message catalogs

|

||||

echo. changes to make an overview of all changed/added/deprecated items

|

||||

echo. xml to make Docutils-native XML files

|

||||

echo. pseudoxml to make pseudoxml-XML files for display purposes

|

||||

echo. linkcheck to check all external links for integrity

|

||||

echo. doctest to run all doctests embedded in the documentation if enabled

|

||||

echo. coverage to run coverage check of the documentation if enabled

|

||||

goto end

|

||||

)

|

||||

|

||||

if "%1" == "clean" (

|

||||

for /d %%i in (%BUILDDIR%\*) do rmdir /q /s %%i

|

||||

del /q /s %BUILDDIR%\*

|

||||

goto end

|

||||

)

|

||||

|

||||

|

||||

REM Check if sphinx-build is available and fallback to Python version if any

|

||||

%SPHINXBUILD% 1>NUL 2>NUL

|

||||

if errorlevel 9009 goto sphinx_python

|

||||

goto sphinx_ok

|

||||

|

||||

:sphinx_python

|

||||

|

||||

set SPHINXBUILD=python -m sphinx.__init__

|

||||

%SPHINXBUILD% 2> nul

|

||||

if errorlevel 9009 (

|

||||

echo.

|

||||

echo.The 'sphinx-build' command was not found. Make sure you have Sphinx

|

||||

echo.installed, then set the SPHINXBUILD environment variable to point

|

||||

echo.to the full path of the 'sphinx-build' executable. Alternatively you

|

||||

echo.may add the Sphinx directory to PATH.

|

||||

echo.

|

||||

echo.If you don't have Sphinx installed, grab it from

|

||||

echo.http://sphinx-doc.org/

|

||||

exit /b 1

|

||||

)

|

||||

|

||||

:sphinx_ok

|

||||

|

||||

|

||||

if "%1" == "html" (

|

||||

%SPHINXBUILD% -b html %ALLSPHINXOPTS% %BUILDDIR%/html

|

||||

if errorlevel 1 exit /b 1

|

||||

echo.

|

||||

echo.Build finished. The HTML pages are in %BUILDDIR%/html.

|

||||

goto end

|

||||

)

|

||||

|

||||

if "%1" == "dirhtml" (

|

||||

%SPHINXBUILD% -b dirhtml %ALLSPHINXOPTS% %BUILDDIR%/dirhtml

|

||||

if errorlevel 1 exit /b 1

|

||||

echo.

|

||||

echo.Build finished. The HTML pages are in %BUILDDIR%/dirhtml.

|

||||

goto end

|

||||

)

|

||||

|

||||

if "%1" == "singlehtml" (

|

||||

%SPHINXBUILD% -b singlehtml %ALLSPHINXOPTS% %BUILDDIR%/singlehtml

|

||||

if errorlevel 1 exit /b 1

|

||||

echo.

|

||||

echo.Build finished. The HTML pages are in %BUILDDIR%/singlehtml.

|

||||

goto end

|

||||

)

|

||||

|

||||

if "%1" == "pickle" (

|

||||

%SPHINXBUILD% -b pickle %ALLSPHINXOPTS% %BUILDDIR%/pickle

|

||||

if errorlevel 1 exit /b 1

|

||||

echo.

|

||||

echo.Build finished; now you can process the pickle files.

|

||||

goto end

|

||||

)

|

||||

|

||||

if "%1" == "json" (

|

||||

%SPHINXBUILD% -b json %ALLSPHINXOPTS% %BUILDDIR%/json

|

||||

if errorlevel 1 exit /b 1

|

||||

echo.

|

||||

echo.Build finished; now you can process the JSON files.

|

||||

goto end

|

||||

)

|

||||

|

||||

if "%1" == "htmlhelp" (

|

||||

%SPHINXBUILD% -b htmlhelp %ALLSPHINXOPTS% %BUILDDIR%/htmlhelp

|

||||

if errorlevel 1 exit /b 1

|

||||

echo.

|

||||

echo.Build finished; now you can run HTML Help Workshop with the ^

|

||||

.hhp project file in %BUILDDIR%/htmlhelp.

|

||||

goto end

|

||||

)

|

||||

|

||||

if "%1" == "qthelp" (

|

||||

%SPHINXBUILD% -b qthelp %ALLSPHINXOPTS% %BUILDDIR%/qthelp

|

||||

if errorlevel 1 exit /b 1

|

||||

echo.

|

||||

echo.Build finished; now you can run "qcollectiongenerator" with the ^

|

||||

.qhcp project file in %BUILDDIR%/qthelp, like this:

|

||||

echo.^> qcollectiongenerator %BUILDDIR%\qthelp\python-awips.qhcp

|

||||

echo.To view the help file:

|

||||

echo.^> assistant -collectionFile %BUILDDIR%\qthelp\python-awips.qhc

|

||||

goto end

|

||||

)

|

||||

|

||||

if "%1" == "devhelp" (

|

||||

%SPHINXBUILD% -b devhelp %ALLSPHINXOPTS% %BUILDDIR%/devhelp

|

||||

if errorlevel 1 exit /b 1

|

||||

echo.

|

||||

echo.Build finished.

|

||||

goto end

|

||||

)

|

||||

|

||||

if "%1" == "epub" (

|

||||

%SPHINXBUILD% -b epub %ALLSPHINXOPTS% %BUILDDIR%/epub

|

||||

if errorlevel 1 exit /b 1

|

||||

echo.

|

||||

echo.Build finished. The epub file is in %BUILDDIR%/epub.

|

||||

goto end

|

||||

)

|

||||

|

||||

if "%1" == "latex" (

|

||||

%SPHINXBUILD% -b latex %ALLSPHINXOPTS% %BUILDDIR%/latex

|

||||

if errorlevel 1 exit /b 1

|

||||

echo.

|

||||

echo.Build finished; the LaTeX files are in %BUILDDIR%/latex.

|

||||

goto end

|

||||

)

|

||||

|

||||

if "%1" == "latexpdf" (

|

||||

%SPHINXBUILD% -b latex %ALLSPHINXOPTS% %BUILDDIR%/latex

|

||||

cd %BUILDDIR%/latex

|

||||

make all-pdf

|

||||

cd %~dp0

|

||||

echo.

|

||||

echo.Build finished; the PDF files are in %BUILDDIR%/latex.

|

||||

goto end

|

||||

)

|

||||

|

||||

if "%1" == "latexpdfja" (

|

||||

%SPHINXBUILD% -b latex %ALLSPHINXOPTS% %BUILDDIR%/latex

|

||||

cd %BUILDDIR%/latex

|

||||

make all-pdf-ja

|

||||

cd %~dp0

|

||||

echo.

|

||||

echo.Build finished; the PDF files are in %BUILDDIR%/latex.

|

||||

goto end

|

||||

)

|

||||

|

||||

if "%1" == "text" (

|

||||

%SPHINXBUILD% -b text %ALLSPHINXOPTS% %BUILDDIR%/text

|

||||

if errorlevel 1 exit /b 1

|

||||

echo.

|

||||

echo.Build finished. The text files are in %BUILDDIR%/text.

|

||||

goto end

|

||||

)

|

||||

|

||||

if "%1" == "man" (

|

||||

%SPHINXBUILD% -b man %ALLSPHINXOPTS% %BUILDDIR%/man

|

||||

if errorlevel 1 exit /b 1

|

||||

echo.

|

||||

echo.Build finished. The manual pages are in %BUILDDIR%/man.

|

||||

goto end

|

||||

)

|

||||

|

||||

if "%1" == "texinfo" (

|

||||

%SPHINXBUILD% -b texinfo %ALLSPHINXOPTS% %BUILDDIR%/texinfo

|

||||

if errorlevel 1 exit /b 1

|

||||

echo.

|

||||

echo.Build finished. The Texinfo files are in %BUILDDIR%/texinfo.

|

||||

goto end

|

||||

)

|

||||

|

||||

if "%1" == "gettext" (

|

||||

%SPHINXBUILD% -b gettext %I18NSPHINXOPTS% %BUILDDIR%/locale

|

||||

if errorlevel 1 exit /b 1

|

||||

echo.

|

||||

echo.Build finished. The message catalogs are in %BUILDDIR%/locale.

|

||||

goto end

|

||||

)

|

||||

|

||||

if "%1" == "changes" (

|

||||

%SPHINXBUILD% -b changes %ALLSPHINXOPTS% %BUILDDIR%/changes

|

||||

if errorlevel 1 exit /b 1

|

||||

echo.

|

||||

echo.The overview file is in %BUILDDIR%/changes.

|

||||

goto end

|

||||

)

|

||||

|

||||

if "%1" == "linkcheck" (

|

||||

%SPHINXBUILD% -b linkcheck %ALLSPHINXOPTS% %BUILDDIR%/linkcheck

|

||||

if errorlevel 1 exit /b 1

|

||||

echo.

|

||||

echo.Link check complete; look for any errors in the above output ^

|

||||

or in %BUILDDIR%/linkcheck/output.txt.

|

||||

goto end

|

||||

)

|

||||

|

||||

if "%1" == "doctest" (

|

||||

%SPHINXBUILD% -b doctest %ALLSPHINXOPTS% %BUILDDIR%/doctest

|

||||

if errorlevel 1 exit /b 1

|

||||

echo.

|

||||

echo.Testing of doctests in the sources finished, look at the ^

|

||||

results in %BUILDDIR%/doctest/output.txt.

|

||||

goto end

|

||||

)

|

||||

|

||||

if "%1" == "coverage" (

|

||||

%SPHINXBUILD% -b coverage %ALLSPHINXOPTS% %BUILDDIR%/coverage

|

||||

if errorlevel 1 exit /b 1

|

||||

echo.

|

||||

echo.Testing of coverage in the sources finished, look at the ^

|

||||

results in %BUILDDIR%/coverage/python.txt.

|

||||

goto end

|

||||

)

|

||||

|

||||

if "%1" == "xml" (

|

||||

%SPHINXBUILD% -b xml %ALLSPHINXOPTS% %BUILDDIR%/xml

|

||||

if errorlevel 1 exit /b 1

|

||||

echo.

|

||||

echo.Build finished. The XML files are in %BUILDDIR%/xml.

|

||||

goto end

|

||||

)

|

||||

|

||||

if "%1" == "pseudoxml" (

|

||||

%SPHINXBUILD% -b pseudoxml %ALLSPHINXOPTS% %BUILDDIR%/pseudoxml

|

||||

if errorlevel 1 exit /b 1

|

||||

echo.

|

||||

echo.Build finished. The pseudo-XML files are in %BUILDDIR%/pseudoxml.

|

||||

goto end

|

||||

)

|

||||

|

||||

:end

|

||||

210

docs/source/about.rst

Normal file

|

|

@ -0,0 +1,210 @@

|

|||

==============
About AWIPS II
==============

|

||||

|

||||

.. raw:: html

|

||||

|

||||

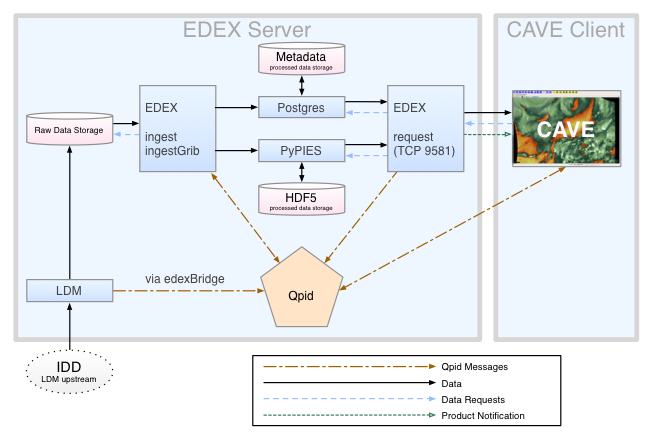

AWIPS II is a weather forecasting display and analysis package being
developed by the National Weather Service and Raytheon. AWIPS II is a
Java application consisting of a data-rendering client (CAVE, which runs
on Red Hat/CentOS Linux and Mac OS X) and a backend data server (EDEX,
which runs only on Linux).

|

||||

|

||||

AWIPS II takes a unified approach to data ingest, and most data types

|

||||

follow a standard path through the system. At a high level, data flow

|

||||

describes the path taken by a piece of data from its source to its

|

||||

display by a client system. This path starts with data requested and

|

||||

stored by an `LDM <#ldm>`_ client and includes the decoding of the data

|

||||

and storing of decoded data in a form readable and displayable by the

|

||||

end user.

|

||||

|

||||

The AWIPS II ingest and request processes form a highly distributed
system, and the messaging broker `Qpid <#qpid>`_ is used for
inter-process communication.

|

||||

|

||||

.. figure:: http://www.unidata.ucar.edu/software/awips2/images/awips2_coms.png

|

||||

:align: center

|

||||

:alt: image

|

||||

|

||||

image

|

||||

|

||||

The primary AWIPS II application for data ingest, processing, and

|

||||

storage is the Environmental Data EXchange (**EDEX**) server; the

|

||||

primary AWIPS II application for visualization/data manipulation is the

|

||||

Common AWIPS Visualization Environment (**CAVE**) client, which is

|

||||

typically installed on a workstation separate from other AWIPS II

|

||||

components.

|

||||

|

||||

In addition to programs developed specifically for AWIPS, AWIPS II uses

|

||||

several commercial off-the-shelf (COTS) and Free or Open Source software

|

||||

(FOSS) products to assist in its operation. The following components,

|

||||

working together and communicating, compose the entire AWIPS II system.

|

||||

|

||||

AWIPS II Python Stack

|

||||

---------------------

|

||||

|

||||

A number of Python packages are bundled with the AWIPS II EDEX and CAVE

|

||||

installations, on top of base Python 2.7.9.

|

||||

|

||||

|

||||

====================== ============== ==============================
Package                Version        RPM Name
====================== ============== ==============================
Python                 2.7.9          awips2-python
pyparsing              2.1.0          awips2-python-pyparsing
scientific             2.8            awips2-python-scientific
pupynere               1.0.13         awips2-python-pupynere
tpg                    3.1.2          awips2-python-tpg
numpy                  1.10.4         awips2-python-numpy
jimporter              15.1.2         awips2-python-jimporter
basemap                1.0.7          awips2-python-basemap
cherrypy               3.1.2          awips2-python-cherrypy
werkzeug               3.1.2          awips2-python-werkzeug
pycairo                1.2.2          awips2-python-pycairo
six                    1.10.0         awips2-python-six
dateutil               2.5.0          awips2-python-dateutil
scipy                  0.17.0         awips2-python-scipy
metpy                  0.3.0          awips2-python-metpy
pygtk                  2.8.6          awips2-python-pygtk
**awips**              **0.9.2**      **awips2-python-awips**
shapely                1.5.9          awips2-python-shapely
matplotlib             1.5.1          awips2-python-matplotlib
cython                 0.23.4         awips2-python-cython
pil                    1.1.6          awips2-python-pil
thrift                 20080411p1     awips2-python-thrift
cartopy                0.13.0         awips2-python-cartopy
nose                   0.11.1         awips2-python-nose
pmw                    1.3.2          awips2-python-pmw
h5py                   1.3.0          awips2-python-h5py
tables                 2.1.2          awips2-python-tables
dynamicserialize       15.1.2         awips2-python-dynamicserialize
qpid                   0.32           awips2-python-qpid
====================== ============== ==============================

|

||||

|

||||

|

||||

EDEX

|

||||

-------------------

|

||||

|

||||

EDEX is the main server for AWIPS II. Qpid sends alerts to EDEX when data stored
by the LDM is ready for processing. These Qpid messages include file
header information which allows EDEX to determine the appropriate data
decoder to use. The default ingest server (simply named ingest) handles
all data ingest other than grib messages, which are processed by a
separate ingestGrib server. After decoding, EDEX writes metadata to the
database via Postgres and saves the processed data in HDF5 via PyPIES. A
third EDEX server, request, feeds requested data to CAVE clients. EDEX
ingest and request servers are started and stopped with the commands
``edex start`` and ``edex stop``, which run the system script
``/etc/rc.d/init.d/edex_camel``.
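
The split between the ingest and ingestGrib servers can be sketched in a few lines of Python. This is an illustration only: the function name ``choose_ingest_server`` and the rule that a grib product is recognized by a header beginning with ``GRIB`` are assumptions made for the example and are not taken from the EDEX source.

```python
# Hypothetical sketch of header-based routing; not the EDEX implementation.

def choose_ingest_server(header: str) -> str:
    """Return the name of the ingest server that would handle a product.

    Assumption: grib products are identified by a header that starts
    with "GRIB". Everything else goes to the default ingest server.
    """
    if header.strip().upper().startswith("GRIB"):
        return "ingestGrib"
    return "ingest"


if __name__ == "__main__":
    for example_header in ("GRIB2 example grid", "SAUS70 KWBC"):
        print(example_header, "->", choose_ingest_server(example_header))
```

The real decoder selection is driven by the header information carried in the Qpid message, so a production rule set would be considerably richer than this single prefix check.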

|

||||

|

||||

CAVE

|

||||

-------------------

|

||||

|

||||

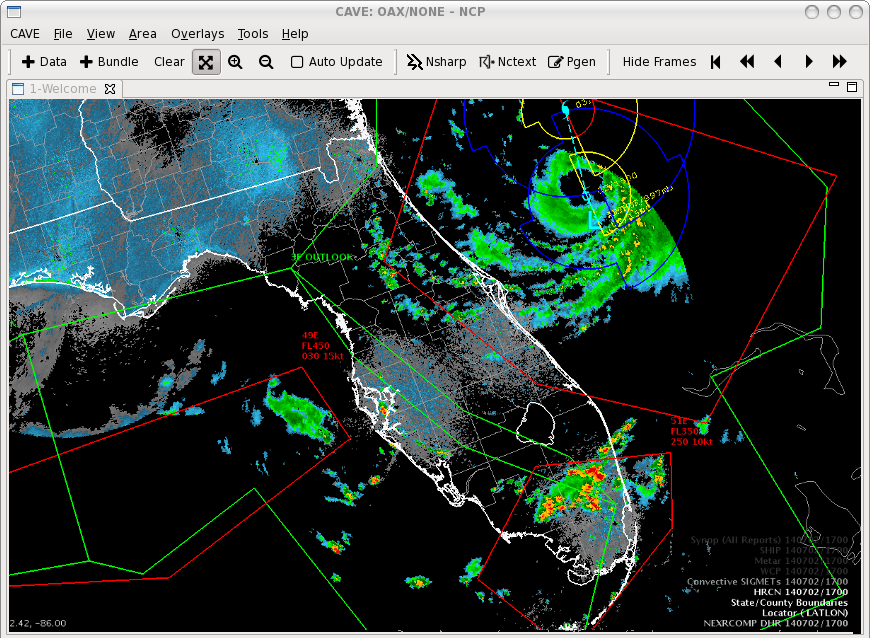

The Common AWIPS Visualization Environment (**CAVE**) is the data rendering and
visualization tool for AWIPS II. CAVE consists of a number of different
data display configurations called perspectives. Perspectives used in
operational forecasting environments include **D2D** (Display
Two-Dimensional), **GFE** (Graphical Forecast Editor), and **NCP**
(National Centers Perspective). CAVE is started with the command
``/awips2/cave/cave.sh`` or ``cave.sh``.

|

||||

|

||||

.. figure:: http://www.unidata.ucar.edu/software/awips2/images/Unidata_AWIPS2_CAVE.png

|

||||

:align: center

|

||||

:alt: CAVE

|

||||

|

||||

CAVE

|

||||

|

||||

Alertviz

|

||||

-------------------

|

||||

|

||||

**Alertviz** is a modernized version of an AWIPS I application, designed
to present various notifications, error messages, and alarms to the user
(forecaster). Alertviz can be executed either independently or from CAVE
itself. In the Unidata CAVE client, Alertviz is run within CAVE and is
not required to be run separately. The toolbar is also **hidden from
view** and is accessed by right-clicking the desktop taskbar icon.

|

||||

|

||||

LDM

|

||||

-------------------

|

||||

|

||||

`http://www.unidata.ucar.edu/software/ldm/ <http://www.unidata.ucar.edu/software/ldm/>`_

|

||||

|

||||

The **LDM** (Local Data Manager), developed and supported by Unidata, is

|

||||

a suite of client and server programs designed for data distribution,

|

||||

and is the fundamental component comprising the Unidata Internet Data

|

||||

Distribution (IDD) system. In AWIPS II, the LDM provides data feeds for

|

||||

grids, surface observations, upper-air profiles, satellite and radar

|

||||

imagery and various other meteorological datasets. The LDM writes data

|

||||

directly to file and alerts EDEX via Qpid when a file is available for

|

||||

processing. The LDM is started and stopped with the commands
``edex start`` and ``edex stop``, which run the commands
``service edex_ldm start`` and ``service edex_ldm stop``.

|

||||

|

||||

edexBridge

|

||||

-------------------

|

||||

|

||||

edexBridge, invoked in the LDM configuration file
``/awips2/ldm/etc/ldmd.conf``, is used by the LDM to post "data
available" messages to Qpid, which alerts the EDEX Ingest server that a
file is ready for processing.

|

||||

|

||||

Qpid

|

||||

-------------------

|

||||

|

||||

`http://qpid.apache.org <http://qpid.apache.org>`_

|

||||

|

||||

**Apache Qpid**, the Queue Processor Interface Daemon, is the messaging

|

||||

system used by AWIPS II to facilitate communication between services.

|

||||

When the LDM receives a data file to be processed, it employs

|

||||

**edexBridge** to send EDEX ingest servers a message via Qpid. When EDEX

|

||||

has finished decoding the file, it sends CAVE a message via Qpid that

|

||||

data are available for display or further processing. Qpid is started
and stopped by ``edex start`` and ``edex stop``, and is controlled by
the system script ``/etc/rc.d/init.d/qpidd``.
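
The message flow described in this section can be sketched with Python's standard ``queue.Queue`` standing in for the Qpid broker. All names here (``edex_bridge_post``, ``ingest_once``, the message fields, and the file path) are hypothetical and chosen for illustration; the real system exchanges these messages through Qpid.

```python
import queue

# Hypothetical sketch: two in-memory queues stand in for Qpid queues.


def edex_bridge_post(broker, path, header):
    """Post a 'data available' message, as edexBridge does for the LDM."""
    broker.put({"path": path, "header": header})


def ingest_once(broker, notifications):
    """Take one message, pretend to decode the file, then notify clients."""
    message = broker.get_nowait()
    decoded = "decoded:" + message["path"]
    notifications.put(decoded)  # stands in for the message sent to CAVE
    return decoded


ingest_queue = queue.Queue()  # LDM/edexBridge -> EDEX ingest
cave_queue = queue.Queue()    # EDEX -> CAVE

edex_bridge_post(ingest_queue, "/tmp/example.dat", "SAUS70 KWBC")
ingest_once(ingest_queue, cave_queue)
```

The point of the sketch is the decoupling: the producer only knows the queue, not which ingest process will pick the message up.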

|

||||

|

||||

PostgreSQL

|

||||

-------------------

|

||||

|

||||

`http://www.postgresql.org <http://www.postgresql.org>`_

|

||||

|

||||

**PostgreSQL**, known simply as Postgres, is a relational database

|

||||

management system (DBMS) which handles the storage and retrieval of

|

||||

metadata, database tables and some decoded data. The storage and reading

|

||||

of EDEX metadata is handled by the Postgres DBMS. Users may query the
metadata tables by using the terminal-based front-end for Postgres
called **psql**. Postgres is started and stopped by ``edex start`` and
``edex stop``, and is controlled by the system script
``/etc/rc.d/init.d/edex_postgres``.

|

||||

|

||||

HDF5

|

||||

-------------------

|

||||

|

||||

`http://www.hdfgroup.org/HDF5/ <http://www.hdfgroup.org/HDF5/>`_

|

||||

|

||||

**Hierarchical Data Format (v.5)** is

|

||||

the primary data storage format used by AWIPS II for processed grids,

|

||||

satellite and radar imagery and other products. Similar to netCDF,

|

||||

developed and supported by Unidata, HDF5 supports multiple types of data

|

||||

within a single file. For example, a single HDF5 file of radar data may

|

||||

contain multiple volume scans of base reflectivity and base velocity as

|

||||

well as derived products such as composite reflectivity. The file may

|

||||

also contain data from multiple radars. HDF5 is stored in

|

||||

``/awips2/edex/data/hdf5/``

|

||||

|

||||

PyPIES (httpd-pypies)
---------------------

|

||||

|

||||

**PyPIES**, Python Process Isolated Enhanced Storage, was created for

|

||||

AWIPS II to isolate the management of HDF5 Processed Data Storage from

|

||||

the EDEX processes. PyPIES manages access, i.e., reads and writes, of

|

||||

data in the HDF5 files. In a sense, PyPIES provides functionality

|

||||

similar to a DBMS (e.g., PostgreSQL for metadata); all data being written

|

||||

to an HDF5 file is sent to PyPIES, and requests for data stored in HDF5

|

||||

are processed by PyPIES.
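
The idea of routing every read and write through a single component can be sketched as follows. This is a minimal illustration, not the PyPIES implementation: the class name ``StorageGatekeeper`` is hypothetical, and a plain dictionary stands in for the HDF5 files.

```python
import threading

# Hypothetical sketch: one object owns all access to the stored data.


class StorageGatekeeper:
    """Serialize reads and writes to a set of named files and datasets."""

    def __init__(self):
        self._lock = threading.Lock()
        self._files = {}  # file name -> {dataset name -> list of values}

    def store(self, file_name, dataset, values):
        """Write a dataset; callers never touch the storage directly."""
        with self._lock:
            self._files.setdefault(file_name, {})[dataset] = list(values)

    def retrieve(self, file_name, dataset):
        """Return a copy of a stored dataset."""
        with self._lock:
            return list(self._files[file_name][dataset])


gatekeeper = StorageGatekeeper()
gatekeeper.store("radar.h5", "base_reflectivity", [10, 20, 30])
gatekeeper.store("radar.h5", "base_velocity", [-5, 0, 5])
```

Because callers only see ``store`` and ``retrieve``, the storage layer can change without affecting the processes that use it, which is the isolation this section describes.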

|

||||

|

||||

PyPIES is implemented in two parts:

1. The PyPIES manager is a Python application that runs as part of an
   Apache HTTP server, and handles requests to store and retrieve data.
2. The PyPIES logger is a Python process that coordinates logging.

PyPIES is started and stopped by ``edex start`` and ``edex stop``, and is
controlled by the system script ``/etc/rc.d/init.d/httpd-pypies``.

|

||||

303

docs/source/conf.py

Normal file

|

|

@ -0,0 +1,303 @@

|

|||

# -*- coding: utf-8 -*-

|

||||

#

|

||||

# python-awips documentation build configuration file, created by

|

||||

# sphinx-quickstart on Tue Mar 15 15:59:23 2016.

|

||||

#

|

||||

# This file is execfile()d with the current directory set to its

|

||||

# containing dir.

|

||||

#

|

||||

# Note that not all possible configuration values are present in this

|

||||

# autogenerated file.

|

||||

#

|

||||

# All configuration values have a default; values that are commented out

|

||||

# serve to show the default.

|

||||

|

||||

import sys

|

||||

import os

|

||||

|

||||

# If extensions (or modules to document with autodoc) are in another directory,

|

||||

# add these directories to sys.path here. If the directory is relative to the

|

||||

# documentation root, use os.path.abspath to make it absolute, like shown here.

|

||||

#sys.path.insert(0, os.path.abspath('.'))

|

||||

|

||||

# -- General configuration ------------------------------------------------

|

||||

|

||||

# If extensions (or modules to document with autodoc) are in another directory,

|

||||

# add these directories to sys.path here. If the directory is relative to the

|

||||

# documentation root, use os.path.abspath to make it absolute, like shown here.

|

||||

sys.path.insert(0, os.path.abspath('.'))

|

||||

sys.path.insert(0, os.path.abspath('../..'))

|

||||

|

||||

# If your documentation needs a minimal Sphinx version, state it here.

|

||||

#needs_sphinx = '1.0'

|

||||

|

||||

# Add any Sphinx extension module names here, as strings. They can be

|

||||

# extensions coming with Sphinx (named 'sphinx.ext.*') or your custom

|

||||

# ones.

|

||||

extensions = [

|

||||

'sphinx.ext.autodoc',

|

||||

'sphinx.ext.intersphinx',

|

||||

'sphinx.ext.viewcode',

|

||||

'notebook_gen_sphinxext'

|

||||

]

|

||||

|

||||

# Add any paths that contain templates here, relative to this directory.

|

||||

templates_path = ['_templates']

|

||||

|

||||

# The suffix(es) of source filenames.

|

||||

# You can specify multiple suffixes as a list of strings:

|

||||

# source_suffix = ['.rst', '.md']

|

||||

source_suffix = '.rst'

|

||||

|

||||

# The encoding of source files.

|

||||

#source_encoding = 'utf-8-sig'

|

||||

|

||||

# The master toctree document.

|

||||

master_doc = 'index'

|

||||

|

||||

# General information about the project.

|

||||

project = u'python-awips'

|

||||

copyright = u'2016, Unidata'

|

||||

author = u'Unidata'

|

||||

|

||||

# The version info for the project you're documenting, acts as replacement for

|

||||

# |version| and |release|, also used in various other places throughout the

|

||||

# built documents.

|

||||

#

|

||||

# The short X.Y version.

|

||||

version = u'0.9.2'

|

||||

# The full version, including alpha/beta/rc tags.

|

||||

|

||||

# The language for content autogenerated by Sphinx. Refer to documentation

|

||||

# for a list of supported languages.

|

||||

#

|

||||

# This is also used if you do content translation via gettext catalogs.

|

||||

# Usually you set "language" from the command line for these cases.

|

||||

language = None

|

||||

|

||||

# There are two options for replacing |today|: either, you set today to some

|

||||

# non-false value, then it is used:

|

||||

#today = ''

|

||||

# Else, today_fmt is used as the format for a strftime call.

|

||||

#today_fmt = '%B %d, %Y'

|

||||

|

||||

# List of patterns, relative to source directory, that match files and

|

||||

# directories to ignore when looking for source files.

|

||||

exclude_patterns = []

|

||||

|

||||

# The reST default role (used for this markup: `text`) to use for all

|

||||

# documents.

|

||||

#default_role = None

|

||||

|

||||

# If true, '()' will be appended to :func: etc. cross-reference text.

|

||||

#add_function_parentheses = True

|

||||

|

||||

# If true, the current module name will be prepended to all description

|

||||

# unit titles (such as .. function::).

|

||||

#add_module_names = True

|

||||

|

||||

# If true, sectionauthor and moduleauthor directives will be shown in the

|

||||

# output. They are ignored by default.

|

||||

#show_authors = False

|

||||

|

||||

# The name of the Pygments (syntax highlighting) style to use.

|

||||

pygments_style = 'sphinx'

|

||||

|

||||

# A list of ignored prefixes for module index sorting.

|

||||

#modindex_common_prefix = []

|

||||

|

||||

# If true, keep warnings as "system message" paragraphs in the built documents.

|

||||

#keep_warnings = False

|

||||

|

||||

# If true, `todo` and `todoList` produce output, else they produce nothing.

|

||||

todo_include_todos = False

|

||||

|

||||

|

||||

# -- Options for HTML output ----------------------------------------------

|

||||

|

||||

# The theme to use for HTML and HTML Help pages. See the documentation for

|

||||

# a list of builtin themes.

|

||||

#html_theme = 'alabaster'

|

||||

html_theme = 'sphinx_rtd_theme'

|

||||

# Theme options are theme-specific and customize the look and feel of a theme

|

||||

# further. For a list of options available for each theme, see the

|

||||

# documentation.

|

||||

#html_theme_options = {}

|

||||

|

||||

# Add any paths that contain custom themes here, relative to this directory.

|

||||

#html_theme_path = []

|

||||

|

||||

# The name for this set of Sphinx documents. If None, it defaults to

|

||||

# "<project> v<release> documentation".

|

||||

#html_title = None

|

||||

|

||||

# A shorter title for the navigation bar. Default is the same as html_title.

|

||||

#html_short_title = None

|

||||

|

||||

# The name of an image file (relative to this directory) to place at the top

|

||||

# of the sidebar.

|

||||

#html_logo = None

|

||||

|

||||

# The name of an image file (relative to this directory) to use as a favicon of

|

||||

# the docs. This file should be a Windows icon file (.ico) being 16x16 or 32x32

|

||||

# pixels large.

|

||||

#html_favicon = None

|

||||

|

||||

# Add any paths that contain custom static files (such as style sheets) here,

|

||||

# relative to this directory. They are copied after the builtin static files,

|

||||

# so a file named "default.css" will overwrite the builtin "default.css".

|

||||

html_static_path = ['_static']

|

||||

|

||||

# Add any extra paths that contain custom files (such as robots.txt or

|

||||

# .htaccess) here, relative to this directory. These files are copied

|

||||

# directly to the root of the documentation.

|

||||

#html_extra_path = []

|

||||

|

||||

# If not '', a 'Last updated on:' timestamp is inserted at every page bottom,

|

||||

# using the given strftime format.

|

||||

#html_last_updated_fmt = '%b %d, %Y'

|

||||

|

||||

# If true, SmartyPants will be used to convert quotes and dashes to

|

||||

# typographically correct entities.

|

||||

#html_use_smartypants = True

|

||||

|

||||

# Custom sidebar templates, maps document names to template names.

|

||||

#html_sidebars = {}

|

||||

|

||||

# Additional templates that should be rendered to pages, maps page names to

|

||||

# template names.

|

||||

#html_additional_pages = {}

|

||||

|

||||

# If false, no module index is generated.

|

||||

#html_domain_indices = True

|

||||

|

||||

# If false, no index is generated.

|

||||

#html_use_index = True

|

||||

|

||||

# If true, the index is split into individual pages for each letter.

|

||||

#html_split_index = False

|

||||

|

||||

# If true, links to the reST sources are added to the pages.

|

||||

#html_show_sourcelink = True

|

||||

|

||||

# If true, "Created using Sphinx" is shown in the HTML footer. Default is True.

|

||||

#html_show_sphinx = True

|

||||

|

||||

# If true, "(C) Copyright ..." is shown in the HTML footer. Default is True.

|

||||

#html_show_copyright = True

|

||||

|

||||

# If true, an OpenSearch description file will be output, and all pages will

|

||||

# contain a <link> tag referring to it. The value of this option must be the

|

||||

# base URL from which the finished HTML is served.

|

||||

#html_use_opensearch = ''

|

||||

|

||||

# This is the file name suffix for HTML files (e.g. ".xhtml").

|

||||

#html_file_suffix = None

|

||||

|

||||

# Language to be used for generating the HTML full-text search index.

|

||||

# Sphinx supports the following languages:

|

||||

# 'da', 'de', 'en', 'es', 'fi', 'fr', 'hu', 'it', 'ja'

|

||||

# 'nl', 'no', 'pt', 'ro', 'ru', 'sv', 'tr'

|

||||

#html_search_language = 'en'

|

||||

|

||||

# A dictionary with options for the search language support, empty by default.

|

||||

# Now only 'ja' uses this config value

|

||||

#html_search_options = {'type': 'default'}

|

||||

|

||||

# The name of a javascript file (relative to the configuration directory) that

|

||||

# implements a search results scorer. If empty, the default will be used.

|

||||

#html_search_scorer = 'scorer.js'

|

||||

|

||||

# Output file base name for HTML help builder.

|

||||

htmlhelp_basename = 'python-awipsdoc'

|

||||

|

||||

# -- Options for LaTeX output ---------------------------------------------

|

||||

|

||||

latex_elements = {

|

||||

# The paper size ('letterpaper' or 'a4paper').

|

||||

#'papersize': 'letterpaper',

|

||||

|

||||

# The font size ('10pt', '11pt' or '12pt').

|

||||

#'pointsize': '10pt',

|

||||

|

||||

# Additional stuff for the LaTeX preamble.

|

||||

#'preamble': '',

|

||||

|

||||

# Latex figure (float) alignment

|

||||

#'figure_align': 'htbp',

|

||||

}

|

||||

|

||||

# Grouping the document tree into LaTeX files. List of tuples

|

||||

# (source start file, target name, title,

|

||||

# author, documentclass [howto, manual, or own class]).

|

||||

latex_documents = [

|

||||

(master_doc, 'python-awips.tex', u'python-awips Documentation',

|

||||

u'Unidata', 'manual'),

|

||||

]

|

||||

|

||||

# The name of an image file (relative to this directory) to place at the top of

|

||||

# the title page.

|

||||

#latex_logo = None

|

||||

|

||||

# For "manual" documents, if this is true, then toplevel headings are parts,

|

||||

# not chapters.

|

||||

#latex_use_parts = False

|

||||

|

||||

# If true, show page references after internal links.

|

||||

#latex_show_pagerefs = False

|

||||

|

||||

# If true, show URL addresses after external links.

|

||||

#latex_show_urls = False

|

||||

|

||||

# Documents to append as an appendix to all manuals.

|

||||

#latex_appendices = []

|

||||

|

||||

# If false, no module index is generated.

|

||||

#latex_domain_indices = True

|

||||

|

||||

|

||||

# -- Options for manual page output ---------------------------------------

|

||||

|

||||

# One entry per manual page. List of tuples

|

||||

# (source start file, name, description, authors, manual section).

|

||||

man_pages = [

|

||||

(master_doc, 'python-awips', u'python-awips Documentation',

|

||||

[author], 1)

|

||||

]

|

||||

|

||||

# If true, show URL addresses after external links.

|

||||

#man_show_urls = False

|

||||

|

||||

|

||||

# -- Options for Texinfo output -------------------------------------------

|

||||

|

||||

# Grouping the document tree into Texinfo files. List of tuples

|

||||

# (source start file, target name, title, author,

|

||||

# dir menu entry, description, category)

|

||||

texinfo_documents = [

|

||||

(master_doc, 'python-awips', u'python-awips Documentation',

|

||||

author, 'python-awips', 'One line description of project.',

|

||||

'Miscellaneous'),

|

||||

]

|

||||

|

||||

# Documents to append as an appendix to all manuals.

|

||||

#texinfo_appendices = []

|

||||

|

||||

# If false, no module index is generated.

|

||||

#texinfo_domain_indices = True

|

||||

|

||||

# How to display URL addresses: 'footnote', 'no', or 'inline'.

|

||||

#texinfo_show_urls = 'footnote'

|

||||

|

||||

# If true, do not generate a @detailmenu in the "Top" node's menu.

|

||||

#texinfo_no_detailmenu = False

|

||||

|

||||

# Set up mapping for other projects' docs

|

||||

intersphinx_mapping = {

|

||||

'matplotlib': ('http://matplotlib.org/', None),

|

||||

'metpy': ('http://docs.scipy.org/doc/metpy/', None),

|

||||

'numpy': ('http://docs.scipy.org/doc/numpy/', None),

|

||||

'scipy': ('http://docs.scipy.org/doc/scipy/reference/', None),

|

||||

'pint': ('http://pint.readthedocs.org/en/stable/', None),

|

||||

'python': ('http://docs.python.org', None)

|

||||

}

|

||||

480

docs/source/dev.rst

Normal file

|

|

@ -0,0 +1,480 @@

|

|||

Development Background

|

||||

----------------------

|

||||

|

||||

In support of Hazard Services, Raytheon Technical Services has built a

|

||||

generic data access framework that can be called via Java or Python. The

|

||||

data access framework code can be found within the AWIPS Baseline in

|

||||

|

||||

::

|

||||

|

||||

com.raytheon.uf.common.dataaccess

|

||||

|

||||

As of 2016, plugins have been written for grid, radar, satellite, Hydro

|

||||

(SHEF), point data (METAR, SYNOP, Profiler, ACARS, AIREP, PIREP), and maps

|

||||

data. The Factories for each can be found in the

|

||||

following packages (you may need to look at the development baseline to

|

||||

see these):

|

||||

|

||||

::

|

||||

|

||||

com.raytheon.uf.common.dataplugin.grid.dataaccess

|

||||

com.raytheon.uf.common.dataplugin.radar.dataaccess

|

||||

com.raytheon.uf.common.dataplugin.satellite.dataaccess

|

||||

com.raytheon.uf.common.dataplugin.binlightning.dataaccess

|

||||

com.raytheon.uf.common.dataplugin.sfc.dataaccess

|

||||

com.raytheon.uf.common.dataplugin.sfcobs.dataaccess

|

||||

com.raytheon.uf.common.dataplugin.acars.dataaccess

|

||||

com.raytheon.uf.common.dataplugin.ffmp.dataaccess

|

||||

com.raytheon.uf.common.dataplugin.bufrua.dataaccess

|

||||

com.raytheon.uf.common.dataplugin.profiler.dataaccess

|

||||

com.raytheon.uf.common.dataplugin.modelsounding.dataaccess

|

||||

com.raytheon.uf.common.dataplugin.ldadmesonet.dataaccess

|

||||

com.raytheon.uf.common.dataplugin.gfe.dataaccess

|

||||

com.raytheon.uf.common.hydro.dataaccess

|

||||

com.raytheon.uf.common.pointdata.dataaccess

|

||||

com.raytheon.uf.common.dataplugin.maps.dataaccess

|

||||

|

||||

Additional data types may be added in the future. To determine what

|

||||

data types are supported, display the "type hierarchy" associated with the

|

||||

classes

|

||||

|

||||

**AbstractGridDataPluginFactory**,

|

||||

|

||||

**AbstractGeometryDatabaseFactory**, and

|

||||

|

||||

**AbstractGeometryTimeAgnosticDatabaseFactory**.

|

||||

|

||||

The following content is adapted, with slight modifications, from the

|

||||

attached design review document.

|

||||

|

||||

Design/Implementation

|

||||

~~~~~~~~~~~~~~~~~~~~~

|

||||

|

||||

The Data Access Framework is designed to provide a consistent interface

|

||||

for requesting and using geospatial data within CAVE or EDEX. Examples

|

||||

of geospatial data are grids, satellite, radar, metars, maps, river gage

|

||||

heights, FFMP basin data, airmets, etc. To allow for convenient use of

|

||||

geospatial data, the framework will support two types of requests: grids

|

||||

and geometries (points, polygons, etc). The framework will also hide

|

||||

implementation details of specific data types from users, making it

|

||||

easier to use data without worrying about how the data objects are

|

||||

structured or retrieved.

|

||||

|

||||

A suggested mapping of some current data types to one of the two

|

||||

supported data requests is listed below. This list is not definitive and

|

||||

can be expanded. If a developer can dream up an interpretation of the

|

||||

data in the other supported request type, that support can be added.

|

||||

|

||||

Grids

|

||||

|

||||

- Grib

|

||||

- Satellite

|

||||

- Radar

|

||||

- GFE

|

||||

|

||||

Geometries

|

||||

|

||||

- Map (states, counties, zones, etc)

|

||||

- Hydro DB (IHFS)

|

||||

- Obs (metar)

|

||||

- FFMP

|

||||

- Hazard

|

||||

- Warning

|

||||

- CCFP

|

||||

- Airmet

|

||||

|

||||
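The suggested mapping above can be written down as a small lookup table. This is only an illustrative sketch: the dictionary name, the lowercase keys, and the ``request_kind`` helper are assumptions made for the example and are not identifiers taken from the framework.

```python
# Hypothetical lookup table; the keys are illustrative labels for the data
# types listed above, not datatype names confirmed by the framework.
REQUEST_KIND_BY_DATATYPE = {
    "grib": "grid",
    "satellite": "grid",
    "radar": "grid",
    "gfe": "grid",
    "map": "geometry",
    "hydro": "geometry",
    "obs": "geometry",
    "ffmp": "geometry",
    "hazard": "geometry",
    "warning": "geometry",
    "ccfp": "geometry",
    "airmet": "geometry",
}


def request_kind(datatype):
    """Return "grid" or "geometry" for a data type, ignoring case."""
    try:
        return REQUEST_KIND_BY_DATATYPE[datatype.lower()]
    except KeyError:
        raise ValueError("no suggested request type for %r" % datatype)


print(request_kind("Satellite"))  # grid
print(request_kind("airmet"))     # geometry
```

Because the list is not definitive, adding a second interpretation of a data type would amount to adding another entry, or a second table, in a sketch like this one.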

The framework is designed around the concept of each data type plugin

|

||||

contributing the necessary code for the framework to support its data.

|

||||

For example, the satellite plugin provides a factory class for

|

||||

interacting with the framework and registers itself as being compatible

|

||||

with the Data Access Framework. This concept is similar to how EDEX in

|

||||

AWIPS II expects a plugin developer to provide a decoder class and

|

||||

record class and register them, but then automatically manages the rest

|

||||

of the ingest process including routing, storing, and alerting on new

|

||||

data. This style of plugin architecture effectively enables the

|

||||

framework to expand its capabilities to more data types without having

|

||||

to alter the framework code itself. This will enable software developers

|

||||

to incrementally add support for more data types as time allows, and

|

||||

allow the framework to expand to new data types as they become

|

||||

available.

|

||||

|

||||

The Data Access Framework will not break any existing functionality or

|

||||

APIs, and there are no plans to retrofit existing code to use the new

|

||||

API at this time. Ideally code will be retrofitted in the future to

|

||||

improve maintainability. The plugin-specific code that hooks into

|

||||

the framework will make use of existing APIs such as **IDataStore** and

|

||||

**IServerRequest** to complete the requests.

|

||||

|

||||

The Data Access Framework can be understood as three parts:

|

||||

|

||||

- How users of the framework retrieve and use the data

|

||||

- How plugin developers contribute support for new data types

|

||||

- How the framework works when it receives a request

|

||||

|

||||

How users of the framework retrieve and use the data

|

||||

~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~

|

||||

|

||||

When a user of the framework wishes to request data, they must

|

||||

instantiate a request object and set some of the values on that request.

|

||||

Two request interfaces will be supported; for detailed methods, see

|

||||

section "Detailed Code" below.

|

||||

|

||||

**IDataRequest**

|

||||

|

||||

**IGridRequest** extends **IDataRequest**

|

||||

|

||||

**IGeometryRequest** extends **IDataRequest**

|

||||

|

||||

For the request interfaces, default implementations of

|

||||

**DefaultGridRequest** and **DefaultGeometryRequest** will be provided

|

||||

to handle most cases. However, the use of interfaces allows for custom

|

||||

special cases in the future. If necessary, the developer of a plugin can

|

||||

write their own custom request implementation to handle a special case.

|

||||

|

||||
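The relationship between the base request, the two default implementations, and a custom special case might look roughly like the following in Python. The class layout and the snake_case method names are assumptions for illustration; only the names **DefaultGridRequest** and **DefaultGeometryRequest** and the "modelName"/"GFS40" identifier come from this document.

```python
class DataRequest(object):
    """Python stand-in for IDataRequest (hypothetical, not the real class)."""

    def __init__(self):
        self.datatype = None
        self.identifiers = {}

    def set_datatype(self, datatype):
        # The datatype doubles as the key used to pick a factory.
        self.datatype = datatype

    def add_identifier(self, key, value):
        # Identifiers narrow down which data the factory should return.
        self.identifiers[key] = value


class DefaultGridRequest(DataRequest):
    """Default request for gridded data."""
    request_kind = "grid"


class DefaultGeometryRequest(DataRequest):
    """Default request for geometry data (points, polygons, etc.)."""
    request_kind = "geometry"


class StationListRequest(DefaultGeometryRequest):
    """Hypothetical special case: a geometry request limited to named stations."""

    def __init__(self, stations):
        super(StationListRequest, self).__init__()
        self.stations = list(stations)


request = DefaultGridRequest()
request.set_datatype("grid")
request.add_identifier("modelName", "GFS40")
```

Code that accepts a ``DataRequest`` keeps working when a plugin supplies its own subclass, which is the point of programming against the interface rather than a concrete class.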

After the request object has been prepared, the user will pass it to the

|

||||

Data Access Layer to receive a data object in return. See the "Detailed

|

||||

Code" section below for detailed methods of the Data Access Layer. The

|

||||

Data Access Layer will return one of two data interfaces.

|

||||

|

||||

**IData**

|

||||

|

||||

**IGridData** extends **IData**

|

||||

|

||||

**IGeometryData** extends **IData**

|

||||

|

||||

For the data interfaces, the use of interfaces effectively hides the

|

||||

implementation details of specific data types from the user of the

|

||||

framework. For example, the user receives an **IGridData** and knows the

|

||||

data time, grid geometry, parameter, and level, but does not know that

|

||||

the data is actually a **GFEGridData** vs **D2DGridData** vs

|

||||

**SatelliteGridData**. This enables users of the framework to write

|

||||

generic code that can support multiple data types.

|

||||

|

||||
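A minimal sketch of what "generic code" means here, assuming a Python abstract base class in place of **IGridData**. The constructor arguments, the ``raw_data`` method, and the sample parameter and level values are all invented for the example; the real data classes may be structured quite differently.

```python
from abc import ABC, abstractmethod


class GridData(ABC):
    """Stand-in for IGridData: what every grid result exposes."""

    def __init__(self, parameter, level, data_time):
        self.parameter = parameter
        self.level = level
        self.data_time = data_time

    @abstractmethod
    def raw_data(self):
        """Return the grid values as a list of rows."""


class GFEGridData(GridData):
    """Keeps its values as rows already (illustrative only)."""

    def __init__(self, parameter, level, data_time, rows):
        super().__init__(parameter, level, data_time)
        self._rows = rows

    def raw_data(self):
        return self._rows


class SatelliteGridData(GridData):
    """Keeps a flat buffer and reshapes it on demand (illustrative only)."""

    def __init__(self, parameter, level, data_time, flat, width):
        super().__init__(parameter, level, data_time)
        self._flat = flat
        self._width = width

    def raw_data(self):
        return [self._flat[i:i + self._width]
                for i in range(0, len(self._flat), self._width)]


def grid_maximum(grid):
    """Generic code: works for any GridData implementation."""
    return max(max(row) for row in grid.raw_data())


gfe = GFEGridData("T", "SFC", "2016-03-15T12:00Z", [[1.0, 2.0], [3.0, 4.0]])
sat = SatelliteGridData("IR", "SFC", "2016-03-15T12:00Z", [5, 9, 7, 1], width=2)
summary = [(g.parameter, grid_maximum(g)) for g in (gfe, sat)]
```

``grid_maximum`` never checks which concrete class it received, so a new grid implementation can be added without touching it.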

For Python users of the framework, the interfaces will be very similar

|

||||

with a few key distinctions. Geometries will be represented by Python

|

||||

geometries from the open-source Shapely project. For grids, the Python

|

||||

**IGridData** will have a method for requesting the raw data as a numpy

|

||||

array, and the Data Access Layer will have methods for requesting the

|

||||

latitude coordinates and the longitude coordinates of grids as numpy

|

||||

arrays. The Python requests and data objects will be pure Python and not

|

||||

JEP PyJObjects that wrap Java objects. A future goal of the Data Access

|

||||

Framework is to provide support to local Python apps and therefore

|

||||

enable requests of data outside of CAVE and EDEX to go through the same

|

||||

familiar interfaces. This goal is out of scope for this project, but by

|

||||

making the request and returned data objects pure Python, it will not be

|

||||

a huge undertaking to add this support in the future.

|

||||

|

||||

How plugin developers contribute support for new datatypes

|

||||

~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~

|

||||

|

||||

When a developer wishes to add support for another data type to the

|

||||

framework, they must implement one or both of the factory interfaces

|

||||

within a common plugin. Two factory interfaces will be supported; for

|

||||

detailed methods see below.

|

||||

|

||||

**IDataFactory**

|

||||

|

||||

**IGridFactory** extends **IDataFactory**

|

||||

|

||||

**IGeometryFactory** extends **IDataFactory**

|

||||

|

||||

For some data types, it may be desired to add support for both types of

|

||||

requests. For example, the developer of grid data may want to provide

|

||||

support for both grid requests and geometry requests. In this case the

|

||||

developer would write two separate classes where one implements

|

||||

**IGridFactory** and the other implements **IGeometryFactory**.

|

||||

Furthermore, factories could be stacked on top of one another by having

|

||||

factory implementations call into the Data Access Layer.

|

||||

|

||||

For example, a custom factory keyed to "derived" could be written for

|

||||

derived parameters, and the implementation of that factory may then call

|

||||

into the Data Access Layer to retrieve "grid" data. In this example the

|

||||

raw data would be retrieved through the **GridDataFactory** while the

|

||||

derived factory then applies the calculations before returning the data.

|

||||

|

||||
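The stacking idea can be sketched as follows. Everything in this example is an assumption made for illustration: the ``DataAccessLayer`` class, its ``register`` and ``get_grid_data`` methods, the parameter names, and the use of a plain dictionary as the data store. It only shows the shape of a "derived" factory that calls back into the layer for "grid" data.

```python
import math


class DataAccessLayer(object):
    """Hypothetical minimal layer: maps a datatype key to a factory."""

    def __init__(self):
        self._factories = {}

    def register(self, datatype, factory):
        self._factories[datatype] = factory

    def get_grid_data(self, datatype, parameter):
        return self._factories[datatype].get_grid_data(parameter)


class GridDataFactory(object):
    """Returns raw values; a dict stands in for the real data store."""

    def __init__(self, store):
        self._store = store

    def get_grid_data(self, parameter):
        return self._store[parameter]


class DerivedDataFactory(object):
    """Fetches raw "grid" data through the layer, then applies a calculation."""

    def __init__(self, layer):
        self._layer = layer

    def get_grid_data(self, parameter):
        if parameter != "windSpeed":
            raise KeyError(parameter)
        u = self._layer.get_grid_data("grid", "uWind")
        v = self._layer.get_grid_data("grid", "vWind")
        # Wind speed is the magnitude of the (u, v) vector at each point.
        return [math.hypot(a, b) for a, b in zip(u, v)]


layer = DataAccessLayer()
layer.register("grid", GridDataFactory({"uWind": [3.0, 6.0], "vWind": [4.0, 8.0]}))
layer.register("derived", DerivedDataFactory(layer))
speeds = layer.get_grid_data("derived", "windSpeed")
```

The derived factory depends only on the layer, not on ``GridDataFactory`` itself, so the raw-data factory could be replaced without changing the derived one.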

Implementations do not need to support all methods on the interfaces or

|

||||

all values on the request objects. For example, a developer writing the

|

||||

**MapGeometryFactory** does not need to support **getAvailableTimes()**

|

||||

because map data such as US counties is time agnostic. In this case the

|

||||

method should throw **UnsupportedOperationException** and the javadoc

|

||||

will indicate this.

|

||||

|

||||
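In Python the closest analogue to throwing **UnsupportedOperationException** is raising ``NotImplementedError``. The sketch below assumes a hypothetical Python ``MapGeometryFactory`` with invented method names and sample data; the real factories are written in Java.

```python
class MapGeometryFactory(object):
    """Hypothetical factory for time-agnostic map data."""

    def __init__(self, shapes_by_table):
        self._shapes_by_table = shapes_by_table

    def get_geometry_data(self, table):
        return self._shapes_by_table[table]

    def get_available_times(self, request=None):
        # Python analogue of throwing UnsupportedOperationException in Java.
        raise NotImplementedError(
            "map data is time agnostic; get_available_times is not supported")


factory = MapGeometryFactory({"county": ["Boulder", "Larimer"]})
try:
    factory.get_available_times()
    times_supported = True
except NotImplementedError:
    times_supported = False
```

Failing loudly, with a message that explains why, tells the caller that the request does not make sense for this data type instead of returning an empty result that could be mistaken for "no data yet".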

As another example, the developer writing **ObsGeometryFactory** can

|

||||

ignore the Level field of the **IDataRequest**, because METAR data has

|

||||

no distinct levels; it is all at the surface. It is up to

|

||||

the factory writer to determine which methods and fields to support and

|

||||

which to ignore, but the factory writer should always code the factory

|

||||

with the user requesting data in mind. If a user of the framework could

|

||||

reasonably expect certain behavior from the framework based on the

|

||||

request, the factory writer should implement support for that behavior.

|

||||

|

||||

Abstract factories will be provided and can be extended to reduce the

|

||||

amount of code a factory developer has to write to complete some common

|

||||

actions that will be used by multiple factories. The factory should be

|

||||

capable of working within either CAVE or EDEX, therefore all of its

|

||||

server specific actions (e.g. database queries) should go through the

|

||||

Request/Handler API by using **IServerRequests**. CAVE can then send the

|

||||

**IServerRequests** to EDEX with **ThriftClient** while EDEX can use the

|

||||

**ServerRequestRouter** to process the **IServerRequests**, making the

|

||||

code compatible regardless of which JVM it is running inside.

|

||||

|

||||
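The reason for funnelling server-specific work through one request API can be shown with a small sketch. The classes below are hypothetical stand-ins, not the real **IServerRequest**, **ThriftClient**, or **ServerRequestRouter**; JSON and a direct function call stand in for the actual serialization and network transport.

```python
import json


class ServerRequest(object):
    """Hypothetical stand-in for an IServerRequest: a named query."""

    def __init__(self, name, payload):
        self.name = name
        self.payload = payload


class LocalRouter(object):
    """Server side: dispatch straight to a registered handler."""

    def __init__(self, handlers):
        self._handlers = handlers

    def route(self, request):
        return self._handlers[request.name](request.payload)


class RemoteClient(object):
    """Client side: serialize the request, 'send' it, and decode the reply.

    A direct call to the server router stands in for the network hop.
    """

    def __init__(self, server_router):
        self._server = server_router

    def route(self, request):
        wire = json.dumps({"name": request.name, "payload": request.payload})
        decoded = json.loads(wire)
        reply = self._server.route(
            ServerRequest(decoded["name"], decoded["payload"]))
        return json.loads(json.dumps(reply))


def station_count(router, state):
    """Factory-style code: identical no matter which router it is given."""
    return router.route(ServerRequest("countStations", {"state": state}))


handlers = {"countStations": lambda p: {"CO": 2, "NE": 1}.get(p["state"], 0)}
server_side = LocalRouter(handlers)
client_side = RemoteClient(server_side)
```

``station_count`` returns the same answer through either router, which mirrors how a factory written against the request API can run unchanged in both JVMs.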

Once the factory code is written, it must be registered with the

|

||||

framework as an available factory. This will be done through spring xml

|

||||

in a common plugin, with the xml file inside the res/spring folder of

|

||||

the plugin. Registering the factory will identify the datatype name that

|

||||

must match what users would use as the datatype on the **IDataRequest**,

|

||||

e.g. the word "satellite". Registering the factory also indicates to the

|

||||

framework what request types are supported, i.e. grid vs geometry or

|

||||

both.

|

||||

|

||||

An example of the spring xml for a satellite factory is provided below:

|

||||

|

||||

::

|

||||

|

||||

<bean id="satelliteFactory"

|

||||

class="com.raytheon.uf.common.dataplugin.satellite.SatelliteFactory" />

|

||||

|

||||

<bean id="satelliteFactoryRegistered" factory-bean="dataFactoryRegistry" factory-method="register">

|

||||

<constructor-arg value="satellite" />

|

||||

<constructor-arg value="com.raytheon.uf.common.dataaccess.grid.IGridRequest" />

|

||||

<constructor-arg value="satelliteFactory" />

|

||||

</bean>

|

||||

|

||||

How the framework works when it receives a request

|

||||

~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~

|

||||

|

||||

**IDataRequest** requires a datatype to be set on every request. The

|

||||

framework will have a registry of existing factories for each data type

|

||||

(grid and geometry). When the Data Access Layer methods are called, it

|

||||

will first look in the registry for the factory that corresponds to

|

||||

the datatype on the **IDataRequest**. If no corresponding factory is

|

||||

found, it will throw an exception with a useful error message that

|

||||

indicates there is no current support for that datatype request. If a

|

||||

factory is found, it will delegate the processing of the request to the

|

||||

factory. The factory will receive the request and process it, returning

|

||||

the result back to the Data Access Layer which then returns it to the

|

||||

caller.

|

||||

|

||||
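The lookup, error, and delegation steps described above might be sketched like this. The registry class, the exception name, and the ``get_data`` method are assumptions for illustration; the three arguments to ``register`` loosely mirror the three constructor arguments in the Spring XML example (datatype name, request type, factory). The "sector" identifier is sample data only.

```python
class UnsupportedDatatypeError(Exception):
    """Raised when no factory is registered for a datatype request."""


class DataFactoryRegistry(object):
    """Hypothetical registry keyed by (datatype, request kind)."""

    def __init__(self):
        self._factories = {}

    def register(self, datatype, request_kind, factory):
        self._factories[(datatype, request_kind)] = factory

    def lookup(self, datatype, request_kind):
        try:
            return self._factories[(datatype, request_kind)]
        except KeyError:
            raise UnsupportedDatatypeError(
                "no %s factory is registered for datatype %r"
                % (request_kind, datatype))


class SatelliteFactory(object):
    """Illustrative factory; returns a dict instead of real grid data."""

    def get_data(self, identifiers):
        return {"datatype": "satellite", "identifiers": dict(identifiers)}


def get_grid_data(registry, datatype, identifiers):
    """Data Access Layer entry point: find the factory, then delegate."""
    factory = registry.lookup(datatype, "grid")
    return factory.get_data(identifiers)


registry = DataFactoryRegistry()
registry.register("satellite", "grid", SatelliteFactory())
result = get_grid_data(registry, "satellite", {"sector": "East CONUS"})
```

The caller only ever sees ``get_grid_data`` and its result; which factory handled the request stays an internal detail of the layer.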

By going through the Data Access Layer, the user is able to retrieve the

|

||||

data and use it without understanding which factory was used, how the

|

||||

factory retrieved the data, or what implementation of data was returned.

|

||||

This effectively frees the framework and users of the framework from any

|

||||

dependencies on any particular data types. Since these dependencies are

|

||||

avoided, the specific **IDataFactory** and **IData** implementations can

|

||||

be altered in the future if necessary and the code making use of the

|

||||

framework will not need to be changed as long as the interfaces continue

|

||||

to be met.

|

||||

|

||||

Essentially, the Data Access Framework is a service that provides data

|

||||

in a consistent way, with the service capabilities being expanded by

|

||||

plugin developers who write support for more data types. Note that the

|

||||

framework itself is useless without plugins contributing and registering

|

||||

**IDataFactories**. Once the framework is coded, developers will need to

|

||||

be tasked to add the factories necessary to support the needed data

|

||||

types.

|

||||

|

||||

Request interfaces

|

||||

~~~~~~~~~~~~~~~~~~

|

||||

|

||||

Requests and returned data interfaces will exist in both Java and

|

||||

Python. The Java interfaces are listed below and the Python interfaces

|

||||

will match the Java interfaces except where noted. Factories will only

|

||||

be written in Java.

|

||||

|

||||

**IDataRequest**

|

||||

|

||||

- **void setDatatype(String datatype)** - the datatype name and

|

||||

also the key that determines which factory will be used. This is frequently

|

||||

the plugin name, such as radar, satellite, gfe, ffmp, etc.

|

||||

|

||||

- **void addIdentifier(String key, Object value)** - an identifier the

|

||||

factory can use to determine which data to return, e.g. for grib data

|

||||

key "modelName" and value "GFS40"

|

||||

|

||||